Introduction

Expectations and conventions

This course assumes that the reader has basic familiarity with C (especially number types, arithmetic operations, string literals and stack vs heap). It will thus not explain concepts which are rigorously identical between Rust and C for the sake of concision. If this is not your case, feel free to ask the teacher about any surprising construct in the course’s material.

We will also compare Rust with C++ where they differ, so that readers familiar with C++ can get a good picture of Rust specificities. But previous knowledge of C++ should not be necessary to get a good understanding of Rust via this course.

Finally, we will make heavy use of “C/++” abbreviation as a shorter alternative to “C and C++” when discussing common properties of C and C++, and how they compare to Rust.

Intended workflow

Welcome to this practical about writing high performance computing in Rust!

You should have been granted access to a browser-based version of Visual Studio Code, where everything needed for the practical is installed. The intended workflow is for you to have this document opened in one browser tab, and the code editor opened in another tab (or even two browser windows side by side if your computer’s screen allows for it).

After performing basic Visual Studio Code configuration, I advise opening a

terminal, which you can do by showing the bottom pane of the code editor using

the Ctrl+J keyboard shortcut.

You would then go to the exercises directory if you’re not already there…

cd ~/exercises

And, when you’re ready to do the exercises, start running cargo run with the

options specified by the corresponding course material.

The exercises are based on code examples that are purposely incorrect.

Therefore, any code example within the provided exercises Rust project, except

for 00-hello.rs, will either fail to compile or fail to run to completion. A

TODO code comment or … symbol will indicate where failures are expected, and

your goal in the exercises will be to modify the code in such a way that the

associated example will compile and run. For runtime failures, you should not

need to change the failing assertion, instead you will need to change other code

such that the assertion passes.

If you encounter any failure which does not seem expected, or if you otherwise get stuck, please call the teacher for guidance!

Now, let’s get started with actual Rust code. You can move to the next page, or any other page within the course for that matter, through the following means:

- Left and right keyboard arrow keys will switch to the previous/next page. Equivalently, arrow buttons will be displayed at the end of each page, doing the same thing.

- There is a menu on the left (not shown by default on small screen, use the top-left button to show it) that allows you to quickly jump to any page of the course. Note, however, that the course material is designed to be read in order.

- With the magnifying glass icon in the top-left corner, or the “S” keyboard shortcut, you can open a search bar that lets you look up content by keywords.

Running the course environment locally

After the school, if you want to get back to this course, you will be able to

run the same development environment on your machine by installing podman or

docker and running the following command:

# Replace "podman" with "docker" if you prefer to use docker

podman run -p 8080:8080 --rm -it gitlab-registry.in2p3.fr/grasland/grayscott-with-rust/rust_code_server

You will then be able to connect to the environement by opening the

http://127.0.0.1:8080 URL in your web browser, and typing in the password that

is displayed at the beginning of the console output of podman run.

Please refrain from doing so during the school, however:

- These container images are designed to please HPC sysadmins who are unhappy with internet downloads from compute nodes. Therefore they bundle everything that may be possibly needed during the course, and are quite heavyweight as a result. If everyone attempts to download them at once at the beginning of the course, it may saturate the building’s or CCIN2P3’s internet connection and therefore disrupt the classes.

- Making your local GPUs work inside of the course’s container can require a fair amount of work, especially if you use NVidia GPUs, whose driver is architected in a manner that is fundamentally hostile to Linux containers. Your local organizers will have done their best to make it work for you during the school, saving you a lot of time. Without such support, a CPU emulation will be used, so the GPU examples will still work but run very slowly.

As for Apptainer/Singularity, although it is reasonably compatible with Docker/Podman container images, it actually does many things very differently from other container engines as far as running containers is concerned. Therefore, it must be run in a very exotic configuration for the course environment to work as expected. Please check out the course repository’s README for details.

First steps

Welcome to Rust computing. This chapter will be a bit longer than the next ones, because we need to introduce a number of basic concepts that you will likely all need to do subsequent exercises. Please read on carefully!

Hello world

Following an ancient tradition, let us start by displaying some text on stdout:

fn main() { println!("Hello world!"); }

Notice the following:

- In Rust, functions declarations start with the

fnkeyword. - Like in C/++, the main function of a program is called

main. - It is legal to return nothing from

main. Likereturn 0;in C/++, it indicates success. - Sending a line of text to standard output can be done using the

println!()macro. We’ll get back to why it is a macro later, but in a nutshell it allows controlling the formatting of values in a way that is similar toprintf()in C and f-strings in Python.

Variables

Rust is in many ways a hybrid of programming languages from the C/++ family and the ML family (Ocaml, Haskell). Following the latter’s tradition, it uses a variable declaration syntax that is very reminiscent of mathematical notation:

#![allow(unused)] fn main() { let x = 4; }

As in mathematics, variables are immutable by default, so the following code does not compile:

#![allow(unused)] fn main() { let x = 4; x += 2; // ERROR: Can't modify non-mut variable }

If you want to modify a variable, you must make it mutable by adding a mut

keyword after let:

#![allow(unused)] fn main() { let mut x = 4; x += 2; // Fine, variable is declared mutable }

This design gently nudges you towards using immutable variables for most things, as in mathematics, which tends to make code easier to reason about.

Alternatively, Rust allows for variable shadowing, so you are allowed to define a new variable with the same name as a previously declared variable, even if it has a different type:

#![allow(unused)] fn main() { let foo = 123; // Here "foo" is an integer let foo = "456"; // Now "foo" is a string and old integer "foo" is not reachable }

This pattern is commonly used in scenarios like parsing where the old value should not be needed after the new one has been declared. It is otherwise a bit controversial, and can make code harder to read, so please don’t abuse this possibility.

Type inference

What gets inferred

Rust is a strongly typed language like C++, yet you may have noticed that the variable declarations above contain no types. That’s because the language supports type inference as a core feature: the compiler can automatically determine the type of variables based on various information.

-

First, the value that is affected to the variable may have an unambiguous type. For example, string literals in Rust are always of type

&str(“reference-to-string”), so the compiler knows that the following variablesmust be of type&str:#![allow(unused)] fn main() { let s = "a string literal of type &str"; } -

Second, the way a variable is used after declaration may give its type away. If you use a variable in a context where a value of type

Tis expected, then that variable must be of typeT.For example, Rust provides a heap-allocated variable-sized array type called

Vec(similar tostd::vectorin C++), whose length is defined to be of typeusize(similar tosize_tin C/++). Therefore, if you use an integer as the length of aVec, the compiler knows that this integer must be of typeusize:#![allow(unused)] fn main() { let len = 7; // This integer variable must be of type usize... let v = vec![4.2; len]; // ...because it's used as the length of a Vec here. // (we'll introduce this vec![] macro later on) } -

Finally, numeric literals can be annotated to force them to be of a specific type. For example, the literal

42i8is a 8-bit signed integer, the literal666u64is a 64-bit unsigned integer, and the12.34f32literal is a 32-bit (“single precision”) IEEE-754 floating point number. By this logic, the following variablexis a 16-bit unsigned integer:#![allow(unused)] fn main() { let x = 999u16; }If none of the above rules apply, then by default, integer literals will be inferred to be of type

i32(32-bit signed integer), and floating-point literals will be inferred to be of typef64(double-precision floating-point number), as in C/++. This ensures that simple programs compile without requiring type annotations.Unfortunately, this fallback rule is not very reliable, as there are a number of common code patterns that will not trigger it, typically involving some form of genericity.

What does not get inferred

There are cases where these three rules will not be enough to determine a variable’s type. This happens in the presence of generic type and functions.

Getting back to the Vec type, for example, it is actually a generic type

Vec<T> where T can be almost any Rust type1. As with std::vector in

C++, you can have a Vec<i32>, a Vec<f32>, or even a Vec<MyStruct> where

MyStruct is a data structure that you have defined yourself.

This means that if you declare empty vectors like this…

#![allow(unused)] fn main() { // The following syntaxes are strictly equivalent. Neither compile. See below. let empty_v1 = Vec::new(); let empty_v2 = vec![]; }

…the compiler has no way to know what kind of Vec you are dealing with. This

cannot be allowed because the properties of a generic type like Vec<T> heavily

depend on what concrete T parameter it’s instantiated with, therefore the

above code does not compile.

In that case, you can enforce a specific variable type using type ascription:

#![allow(unused)] fn main() { // The compiler knows this is a Vec<bool> because we said so let empty_vb: Vec<bool> = Vec::new(); }

Inferring most things is the idiom

If you are coming from another programming language where type inference is either not provided, or very hard to reason about as in C++, you may be tempted to use type ascription to give an explicit type to every single variable. But I would advise resisting this temptation for a few reasons:

- Rust type inference rules are much simpler than those of C++. It only takes a small amount of time to “get them in your head”, and once you do, you will get more concise code that is less focused on pleasing the type system and more on performing the task at hand.

- Doing so is the idiomatic style in the Rust ecosystem. If you don’t follow it, your code will look odd to other Rust developers, and you will have a harder time reading code written by other Rust developers.

- If you have any question about inferred types in your program, Rust comes with excellent IDE support, so it is very easy to configure your code editor so that it displays inferred types, either all the time or on mouse hover.

But of course, there are limits to this approach. If every single type in the program was inferred, then a small change somewhere in the implementation your program could non-locally change the type of many other variables in the program, or even in client code, resulting in accidental API breakages, as commonly seen in dynamically typed programming language.

For this reason, Rust will not let you use type inference in entities that may appear in public APIs, like function signatures or struct declarations. This means that in Rust code, type inference will be restricted to the boundaries of a single function’s implementation. Which makes it more reliable and easier to reason about, as long as you do not write huge functions.

Back to println!()

With variable declarations out of the way, let’s go back to our hello world example and investigate the Rust text formatting macro in more details.

Remember that at the beginning of this chapter, we wrote this hello world statement:

#![allow(unused)] fn main() { println!("Hello world!"); }

This program called the println macro with a string literal as an argument.

Which resulted in that string being written to the program’s standard output,

followed by a line feed.

If all we could pass to println was a string literal, however, it wouldn’t

need to be a macro. It would just be a regular function.

But like f-strings in Python, the println provides a variety of text

formatting operations, accessible via curly braces. For example, you can

interpolate variables from the outer scope…

#![allow(unused)] fn main() { let x = 4; // Will print "x is 4" println!("x is {x}"); }

…pass extra arguments in a style similar to printf in C…

#![allow(unused)] fn main() { let s = "string"; println!("s is {}", s); }

…and name arguments so that they are easier to identify in complex usage.

#![allow(unused)] fn main() { println!("x is {x} and y is {y}. Their sum is {x} + {y} = {sum}", x = 4, y = 5, sum = 4 + 5); }

You can also control how these arguments are converted to strings, using a mini-language that is described in the documentation of the std::fmt module from the standard library.

For example, you can enforce that floating-point numbers are displayed with a certain number of decimal digits:

#![allow(unused)] fn main() { let x = 4.2; // Will print "x is 4.200000" println!("x is {x:.6}"); }

println!() is part of a larger family of text formatting and text I/O macros

that includes…

print!(), which differs fromprintln!()by not adding a trailing newline at the end. Beware that since stdout is line buffered, this will result in no visible output until the nextprintln!(), unless the text that you are printing contains the\nline feed character.eprint!()andeprintln!(), which work likeprint!()andprintln!()but write their output to stderr instead of stdout.write!()andwriteln!(), which take a byte or text output stream2 as an extra argument and write down the specified text there. This is the same idea asfprintf()in C.format!(), which does not write the output string to any I/O stream, but instead builds a heap-allocatedStringcontaining it for later use.

All of these macros use the same format string mini-language as println!(),

although their semantics differ. For example, write!() takes an extra output

stream arguments, and returns a Result to account for the possibility of I/O

errors. Since these errors are rare on stdout and stderr, they are just treated

as fatal by the print!() family, keeping the code that uses them simpler.

From Display to Debug

So far, we have been printing simple numerical types. What they have in common is that there is a single, universally agreed upon way to display them, modulo formatting options. So the Rust standard library can afford to incorporate this display logic into its stability guarantees.

But some other types are in a muddier situation. For example, take the Vec

dynamically-sized array type. You may think that something like “[1, 2, 3, 4,

5]” would be a valid way to display an array containing the numbers containing

numbers from 1 to 5. But what happens when the array contains billions of

numbers? Should we attempt to display all of them, drowning the user’s terminal

in endless noise and slowing down the application to a crawl? Or should we

summarize the display in some way like numpy does in Python?

There is no single right answer to this kind of question, and attempting to account for all use cases would bloat up Rust’s text formatting mini-language very quickly. So instead, Rust does not provide a standard text display for these types, and therefore the following code does not compile:

#![allow(unused)] fn main() { // ERROR: Type Vec does not implement Display println!("{}", vec![1, 2, 3, 4, 5]); }

All this is fine and good, but we all know that in real-world programming, it is very convenient during program debugging to have a way to exhaustively display the contents of a variable. Unlike C++, Rust acknowledges this need by distinguishing two different ways to translate a typed value to text:

- The

Displaytrait provides, for a limited set of types, an “official” value-to-text translation logic that should be fairly consensual, general-purpose, suitable for end-user consumption, and can be covered by library stability guarantees. - The

Debugtrait provides, for almost every type, a dumber value-to-text translation logic which prints out the entire contents of the variable, including things which are considered implementation details and subjected to change. It is purely meant for developer use, and showing debug strings to end users is somewhat frowned upon, although they are tolerated in developer-targeted output like logs or error messages.

As you may guess, although Vec does not implement the Display operation, it

does implement Debug, and in the mini-language of println!() et al, you can

access this alternate Debug logic using the ? formatting option:

#![allow(unused)] fn main() { println!("{:?}", vec![1, 2, 3, 4, 5]); }

As a more interesting example, strings implement both Display and Debug. The

Display variant displays the text as-is, while the Debug logic displays it

as you would type it in a program, with quotes around it and escape sequences

for things like line feeds and tab stops:

#![allow(unused)] fn main() { let s = "This is a \"string\".\nWell, actually more of an str."; println!("String display: {s}"); println!("String debug: {s:?}"); }

Both Display and Debug additionally support an alternate display mode,

accessible via the # formatting option. For composite types like Vec, this

has the effect of switching to a multi-line display (one line feed after each

inner value), which can be more readable for complex values:

#![allow(unused)] fn main() { let v = vec![1, 2, 3, 4, 5]; println!("Normal debug: {v:?}"); println!("Alternate debug: {v:#?}"); }

For simpler types like integers, this may have no effect. It’s ultimately up to

implementations of Display and Debug to decide what this formatting option

does, although staying close to the standard library’s convention is obviously

strongly advised.

Finally, for an even smoother printout-based debugging experience, you can use

the dbg!() macro. It takes an expression as input and prints out…

- Where you are in the program (source file, line, column).

- What is the expression that was passed to

dbg, in un-evaluated form (e.g.dbg!(2 * x)would literally print “2 * x”). - What is the result of evaluating this expression, with alternate debug formatting.

…then the result is re-emitted as an output, so that the program can keep

using it. This makes it easy to annotate existing code with dbg!() macros,

with minimal disruption:

#![allow(unused)] fn main() { let y = 3 * dbg!(4 + 2); println!("{y}"); }

Assertions

With debug formatting covered, we are now ready to cover the last major component of the exercises, namely assertions.

Rust has an assert!() macro which can be used similarly to the C/++ macro of

the same name: make sure that a condition that should always be true if the code

is correct, is indeed true. If the condition is not true, the thread will panic.

This is a process analogous to throwing a C++ exception, which in simple cases

will just kill the program.

#![allow(unused)] fn main() { assert!(1 == 1); // This will have no user-visible effect assert!(2 == 3); // This will trigger a panic, crashing the program }

There are, however, a fair number of differences between C/++ and Rust assertions:

-

Although well-intentioned, the C/++ practice of only checking assertions in debug builds has proven to be tracherous in practice. Therefore, most Rust assertions are checked in all builds. When the runtime cost of checking an assertion in release builds proves unacceptable, you can use

debug_assert!()instead, for assertions which are only checked in debug builds. -

Rust assertions do not abort the process in the default compiler configuration. Cleanup code will still run, so e.g. files and network conncections will be closed properly, reducing system state corruption in the event of a crash. Also, unlike unhandled C++ exceptions, Rust panics make it trivial to get a stack trace at the point of assertion failure by setting the

RUST_BACKTRACEenvironment variable to 1. -

Rust assertions allow you to customize the error message that is displayed in the event of a failure, using the same formatting mini-language as

println!():#![allow(unused)] fn main() { let sonny = "dead"; assert!(sonny == "alive", "Look how they massacred my boy :( Why is Sonny {}?", sonny); }

Finally, one common case for wanting custom error messages in C++ is when

checking two variables for equality. If they are not equal, you will usually

want to know what are their actual values. In Rust, this is natively supported

by the assert_eq!() and assert_ne!(), which respectively check that two

values are equal or not equal.

If the comparison fails, the two values being compared will be printed out with Debug formatting.

#![allow(unused)] fn main() { assert_eq!(2 + 2, 5, "Don't you dare make Big Brother angry! >:("); }

Exercise

Now, go to your code editor, open the examples/01-let-it-be.rs source

file, and address the TODOs in it. The code should compile and runs successfully

at the end.

To attempt to compile and run the file after making corrections, you may use the following command in the VSCode terminal:

cargo run --example 01-let-it-be

…with the exception of dynamic-sized types, an advanced topic which we cannot afford to cover during this short course. Ask the teacher if you really want to know ;)

In Rust’s abstraction vocabulary, text can be written to implementations

of one of the std::io::Write and std::fmt::Write traits. We will

discuss traits much later in this course, but for now you can think of a

trait as a set of functions and properties that is shared by multiple

types, allowing for type-generic code. The distinction between io::Write

and fmt::Write is that io::Write is byte-oriented and fmt::Write

latter is text-oriented. We need this distinction because not every byte

stream is a valid text stream in UTF-8.

Numbers

Since this is a numerical computing course, a large share of the material will be dedicated to the manipulation of numbers (especially floating-point ones). It is therefore essential that you get a good grasp of how numerical data works in Rust. Which is the purpose of this chapter.

Primitive types

We have previously mentioned some of Rust’s primitive numerical types. Here is the current list:

u8,u16,u32,u64andu128are fixed-size unsigned integer types. The number indicates their storage width in bits.usizeis an unsigned integer type suitable for storing the size of an object in memory. Its size varies from one computer to another : it is 64-bit wide on most computers, but can be as narrow as 16-bit on some embedded platform.i8,i16,i32,i64,i128andisizeare signed versions of the above integer types.f32andf64as the single-precision and double-precision IEEE-754 floating-point types.

This list is likely to slowly expand in the future, for example there are

proposals for adding f16 and f128 to this list (representing IEEE-754

half-precision and quad-precision floating point types respectively). But for

now, these types can only be manipulated via third-party libraries.

Literals

As you have seen, Rust’s integer and floating-point literals look very similar

to those of C/++. There are a few minor differences, for example the

quality-of-life feature to put some space between digits of large numbers uses

the _ character instead of '…

#![allow(unused)] fn main() { println!("A big number: {}", 123_456_789); }

…but the major difference, by far, is that literals are not typed in Rust. Their type is almost always inferred based on the context in which they are used. And therefore…

- In Rust, you rarely need typing suffixes to prevent the compiler from truncating your large integers, as is the norm in C/++.

- Performance traps linked to floating point literals being treated as double precision when you actually want single precision computations are much less common.

Part of the reason why type inference works so well in Rust is that unlike C/++, Rust has no implicit conversions.

Conversions

In C/++, every time one performs arithmetic or assigns values to variables, the compiler will silently insert conversions between number types as needed to get the code to compile. This is problematic for two reasons:

- “Narrowing” conversions from types with many bits to types with few bits can lose important information, and thus produce wrong results.

- “Promoting” conversions from types with few bits to types with many bits can result in computations being performed with excessive precision, at a performance cost, only for the hard-earned extra result bits to be discarded during the final variable affectation step.

If we were to nonetheless apply this notion in a Rust context, there would be a third Rust-specific problem, which is that implicit conversions would break the type inference of numerical literals in all but the simplest cases. If you can pass variables of any numerical types to functions accepting any other numerical type, then the compiler’s type inference cannot know what is the numerical literal type that you actually intended to use. This would greatly limit type inference effectiveness.

For all these reasons, Rust does not allow for implicit type conversions. A

variable of type i8 can only accept values of type i8, a variable of type

f32 can only accept values of type f32, and so on.

If you want C-style conversions, the simplest way is to use as casts:

#![allow(unused)] fn main() { let x = 4.2f32 as i32; }

As many Rust programmers were unhappy with the lossy nature of these casts, fancier conversions with stronger guarantees (e.g. only work if no information is lost, report an error if overflow occurs) have slowly been made available. But we probably won’t have the time to cover them in this course.

Arithmetic

The syntax of Rust arithmetic is generally speaking very similar to that of

C/++, with a few minor exceptions like ! replacing ~ for integer bitwise

NOT. But the rules for actually using these operators are quite different.

For the same reason that implicit conversions are not supported, mixed

arithmetic between multiple numerical types is not usually supported in Rust

either. This will often be a pain points for people used to the C/++ way, as it

means that classic C numerical expressions like 4.2 / 2 are invalid and will

not compile. Instead, you will need to get used to writing 4.2 / 2.0.

On the flip side, Rust tries harder than C/++ to handler incorrect arithmetic operations in a sensible manner. In C/++, two basic strategies are used:

- Some operations, like overflowing unsigned integers or assigning the 123456 literal to an 8-bit integer variable, silently produce results that violate mathematical intuition.

- Other operations, like overflowing signed integers or casting floating-point NaNs and infinities to integers, result in undefined behavior. This gives the compiler and CPU license to trash your entire program (not just the function that contains the faulty instruction) in unpredictable ways.

As you may guess by the fact that signed and unsigned integer operations are treated differently, it is quite hard to guess which strategy is being used, even though one is obviously a lot more dangerous than the other.

But due to the performance impact of checking for arithmetic errors at runtime, Rust cannot systematically do so and remain performance-competitive with C/++. So a distinction is made between debug and release builds:

- In debug builds, invalid arithmetic stops the program using panics. You can think of a panic as something akin to a C++ exception, but which you are not encouraged to recover from.

- In release builds, invalid arithmetic silently produces wrong results, but never causes undefined behavior.

As one size does not fit all, individual integer and floating-point types also

provide methods which re-implement the arithmetic operator with different

semantics. For example, the saturating_add() method of integer types handle

addition overflow and underflow by returning the maximal or minimal value of the

integer type of interest, respectively:

#![allow(unused)] fn main() { println!("{}", 42u8.saturating_add(240)); // Prints 255 println!("{}", (-40i8).saturating_add(-100)); // Prints -128 }

Methods

In Rust, unlike in C++, any type can have methods, not just class-like types. As a result, most of the mathematical functions that are provided as free functions in the C and C++ mathematical libraries are provided as methods of the corresponding types in Rust:

#![allow(unused)] fn main() { let x = 1.2f32; let y = 3.4f32; let basic_hypot = (x.powi(2) + y.powi(2)).sqrt(); }

Depending on which operation you are looking at, the effectiveness of this

design choice varies. On one hand, it works great for operations which are

normally written on the right hand side in mathematics, like raising a number to

a certain power. And it allows you to access mathematical operations with less

module imports. On the other hand, it looks decidedly odd and Java-like for

operations which are normally written in prefix notation in mathematics, like

sin() and cos().

If you have a hard time getting used to it, note that prefix notation can be

quite easily implemented as a library, see for example

prefix-num-ops.

The set of operations that Rust provides on primitive types is also a fair bit broader than that provided by C/++, covering many operations which are traditionally only available via compiler intrinsics or third-party libraries in other languages. Although to C++’s credit, it must be said that the situation has, in a rare turn of events, actually been improved by newer standard revisions.

To know which operations are available via methods, just check the appropriate pages from the standard library’s documentation.

Exercise

Now, go to your code editor, open the examples/02-numerology.rs source file,

and address the TODOs in it. The code should compile and runs successfully at

the end.

To attempt to compile and run the file after making corrections, you may use the following command in the VSCode terminal:

cargo run --example 02-numerology

Loops and arrays

As a result of this course being time-constrained, we do not have the luxury of deep-diving into Rust possibilities like a full Rust course would. Instead, we will be focusing on the minimal set of building blocks that you need in order to do numerical computing in Rust.

We’ve covered variables and basic debugging tools in the first chapter, and we’ve covered integer and floating-point arithmetic in the second chapter. Now it’s time for the last major language-level component of numerical computations: loops, arrays, and other iterable constructs.

Range-based loop

The basic syntax for looping over a range of integers is simple enough:

#![allow(unused)] fn main() { for i in 0..10 { println!("i = {i}"); } }

Following an old tradition, ranges based on the .. syntax are left inclusive

and right exclusive, i.e. the left element is included, but the right element is

not included. The reasons why this is a good default have been explained at

length

elsewhere,

so we will not bother with repeating them here.

However, Rust acknowledges that ranges that are inclusive on both sides also have their uses, and therefore they are available through a slightly more verbose syntax:

#![allow(unused)] fn main() { println!("Fortran and Julia fans, rejoice!"); for i in 1..=10 { println!("i = {i}"); } }

The Rust range types are actually used for more than iteration. They accept

non-integer bounds, and they provide a contains() method to check that a value

is contained within a range. And all combinations of inclusive, exclusive, and

infinite bounds are supported by the language, even though not all of them can

be used for iteration:

- The

..infinite range contains all elements in some ordered set x..ranges start at a certain value and contain all subsequent values in the set..yand..=yranges start at the smallest value of the set and contain all values up to an exclusive or inclusive upper bound- The

Boundstandard library type can be used to cover all other combinations of inclusive, exclusive, and infinite bounds, via(Bound, Bound)tuples

Iterators

Under the hood, the Rust for loop has no special support for ranges of

integers. Instead, it operates over a pair of lower-level standard library

primitives called

Iterator and

IntoIterator.

These can be described as follows:

- A type that implements the

Iteratortrait provides anext()method, which produces a value and internally modifies the iterator object so that a different value will be produced when thenext()method is called again. After a while, a specialNonevalue is produced, indicating that all available values have been produced, and the iterator should not be used again. - A type that implements the

IntoIteratortrait “contains” one or more values, and provides aninto_iter()method which can be used to create anIteratorthat yields those inner values.

The for loop uses these mechanisms as follows:

#![allow(unused)] fn main() { fn do_something(i: i32) {} // A for loop like this... for i in 0..3 { do_something(i); } // ...is effectively translated into this during compilation: let mut iterator = (0..3).into_iter(); while let Some(i) = iterator.next() { do_something(i); } }

Readers familiar with C++ will notice that this is somewhat similar to STL iterators and C++11 range-base for loops, but with a major difference: unlike Rust iterators, C++ iterators have no knowledge of the end of the underlying data stream. That information must be queried separately, carried around throughout the code, and if you fail to handle it correctly, undefined behavior will ensue.

This difference comes at a major usability cost, to the point where after much debate, 5 years after the release of the first stable Rust version, the C++20 standard revision has finally decided to soft-deprecate standard C++ iterators in favor of a Rust-like iterator abstraction, confusingly calling it a “range” since the “iterator” name was already taken.1

Another advantage of the Rust iterator model is that because Rust iterator

objects are self-sufficient, they can implement methods that transform an

iterator object in various ways. The Rust Iterator trait heavily leverages

this possibility, providing dozens of

methods

that are automatically implemented for every standard and user-defined iterator

type, even though the default implementations can be overriden for performance.

Most of these methods consume the input iterator and produce a different iterator as an output. These methods are commonly called “adapters”. Here is an example of one of them in action:

#![allow(unused)] fn main() { // Turn an integer range into an iterator, then transform the iterator to only // yield one element every 10 elements. for i in (0..100).into_iter().step_by(10) { println!("i = {i}"); } }

One major property of these iterator adapters is that they operate lazily: transformations are performed on the fly as new iterator elements are generated, without needing to collect transformed data in intermediary collections. Because compilers are bad at optimizing out memory allocations and data movement, this way of operating is a lot better than generating temporary collections from a performance point of view, to the point where code that uses iterator adapters usually compiles down to the same assembly as an optimal hand-written while loop.

For reasons that will be explained over the next parts of this course, usage of iterator adapters is very common in idiomatic Rust code, and generally preferred over equivalent imperative programming constructs unless the latter provide a significant improvement in code readability.

Arrays and Vecs

It is not just integer ranges that can be iterated over. Two other iterable Rust

objects of major interest to numerical computing are arrays and Vecs.

They are very similar to std::array and std::vector in C++:

- The storage for array variables is fully allocated on the stack.2 In

contrast, the storage for a

Vec’s data is allocated on the heap, using the Rust equivalent ofmalloc()andfree(). - The size of an array must be known at compile time and cannot change during

runtime. In contrast, it is possible to add and remove elements to a

Vec, and the underlying backing store will be automatically resized through memory reallocations and copies to accomodate this. - It is often a bad idea to create and manipulate large arrays because they can

overflow the program’s stack (resulting in a crash) and are expensive to move

around. In contrast,

Vecs will easily scale as far as available RAM can take you, but they are more expensive to create and destroy, and accessing their contents may require an extra pointer indirection. - Because of the compile-time size constraint, arrays are generally less

ergonomic to manipulate than

Vecs. ThereforeVecshould be your first choice unless you have a good motivation for using arrays (typically heap allocation avoidance).

There are three basic ways to create a Rust array…

- Directly provide the value of each element:

[1, 2, 3, 4, 5]. - State that all elements have the same value, and how many elements there are:

[42; 6]is the same as[42, 42, 42, 42, 42, 42]. - Use the

std::array::from_fnstandard library function to initialize each element based on its position within the array.

…and Vecs supports the first two initialization method via the vec! macro,

which uses the same syntax as array literals:

#![allow(unused)] fn main() { let v = vec![987; 12]; }

However, there is no equivalent of std::array::from_fn for Vec, as it is

replaced by the superior ability to construct Vecs from either iterators or

C++-style imperative code:

#![allow(unused)] fn main() { // The following three declarations are rigorously equivalent, and choosing // between them is just a matter of personal preference. // Here, we need to tell the compiler that we're building a Vec, but we can let // it infer the inner data type. let v1: Vec<_> = (123..456).into_iter().collect(); let v2 = (123..456).into_iter().collect::<Vec<_>>(); let mut v3 = Vec::with_capacity(456 - 123 + 1); for i in 123..456 { v3.push(i); } assert_eq!(v1, v2); assert_eq!(v1, v3); }

In the code above, the Vec::with_capacity constructor plays the same role as

the reserve() method of C++’s std::vector: it lets you tell the Vec

implementation how many elements you expect to push() upfront, so that said

implementation can allocate a buffer of the right length from the beginning and

thus avoid later reallocations and memory movement on push().

And as hinted during the beginning of this section, both arrays and Vecs

implement IntoIterator, so you can iterate over their elements:

#![allow(unused)] fn main() { for elem in [1, 3, 5, 7] { println!("{elem}"); } }

Indexing

Following the design of most modern programming languages, Rust lets you access array elements by passing a zero-based integer index in square brackets:

#![allow(unused)] fn main() { let arr = [9, 8, 5, 4]; assert_eq!(arr[2], 5); }

However, unlike in C/++, accessing arrays at an invalid index does not result in undefined behavior that gives the compiler license to arbitrarily trash your program. Instead, the thread will just deterministically panic, which by default will result in a well-controlled program crash.

Unfortunately, this memory safety does not come for free. The compiler has to insert bounds-checking code, which may or may not later be removed by its optimizer. When they are not optimized out, these bound checks tend to make array indexing a fair bit more expensive from a performance point of view in Rust than in C/++.

And this is actually one of the many reasons to prefer iteration over manual

array and Vec indexing in Rust. Because iterators access array elements using a

predictable and known-valid pattern, they can work without bound checks.

Therefore, they can be used to achieve C/++-like performance, without relying on

faillible compiler optimizations or unsafe code in your program.3 And

another major benefit is obviously that you cannot crash your program by using

iterators wrong.

But for those cases where you do need some manual indexing, you will likely

enjoy the enumerate() iterator adapter, which gives each iterator element an

integer index that starts at 0 and keeps growing. It is a very convenient tool

for bridging the iterator world with the manual indexing world:

#![allow(unused)] fn main() { // Later in the course, you will learn a better way of doing this let v1 = vec![1, 2, 3, 4]; let v2 = vec![5, 6, 7, 8]; for (idx, elem) in v1.into_iter().enumerate() { println!("v1[{idx}] is {elem}"); println!("v2[{idx}] is {}", v2[idx]); } }

Slicing

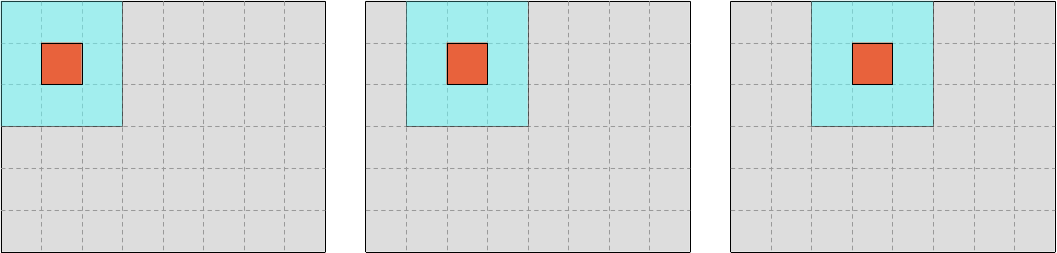

Sometimes, you need to extract not just one array element, but a subset of array elements. For example, in the Gray-Scott computation that we will be working on later on in the course, you will need to work on sets of 3 consecutive elements from an input array.

The simplest tool that Rust provides you to deal with this situation is slices, which can be built using the following syntax:

#![allow(unused)] fn main() { let a = [1, 2, 3, 4, 5]; let s = &a[1..4]; assert_eq!(s, [2, 3, 4]); }

Notice the leading &, which means that we take a reference to the original

data (we’ll get back to what this means in a later chapter), and the use of

integer ranges to represent the set of array indices that we want to extract.

If this reminds you of C++20’s std::span, this is no coincidence. Spans are

another of many instances of C++20 trying to catch up with Rust features from 5

years ago.

Manual slice extraction comes with the same pitfalls as manual indexing (costly bound checks, crash on error…), so Rust obviously also provides iterators of slices that don’t have this problem. The most popular ones are…

chunks()andchunk_exact(), which cut up your array/vec into a set of consecutive slices of a certain length and provide an iterator over these slices.- For example,

chunks(2)would yield elements at indices0..2,2..4,4..6, etc. - They differ in how they handle trailing elements of the array.

chunks_exact()compiles down to more efficient code, but is a bit more cumbersome to use because you need to handle trailing elements using a separate code path.

- For example,

windows(), where the iterator yields overlapping slices, each shifted one array/vec element away from the previous one.- For example,

windows(2)would yield elements at indices0..2,1..3,2..4, etc. - This is exactly the iteration pattern that we need for discrete convolution, which the school’s flagship Gray-Scott reaction computation is an instance of.

- For example,

All these methodes are not just restricted to arrays and Vecs, you can just as

well apply them to slices, because they are actually methods of the slice

type to begin with. It just

happens that Rust, through some compiler magic,4 allows you to call slice

type methods on arrays and Vecs, as if they were the equivalent

all-encompassing &v[..] slice.

Therefore, whenever you are using arrays and Vecs, the documentation of the

slice type is also worth keeping around. Which is why the official documentation

helps you at this by copying it into the documentation of the

array and

Vec types.

Exercise

Now, go to your code editor, open the examples/03-looping.rs source file, and

address the TODOs in it. The code should compile and runs successfully at the

end.

To attempt to compile and run the file after making corrections, you may use the following command in the VSCode terminal:

cargo run --example 03-looping

It may be worth pointing out that replacing a major standard library abstraction like this in a mature programming language is not a very wise move. 4 years after the release of C++20, range support in the standard library of major C++ compilers is still either missing or very immature and support in third-party C++ libraries is basically nonexistent. Ultimately, C++ developers will unfortunately be the ones paying the price of this standard commitee decision by needing to live with codebases that confusingly mix and match STL iterators and ranges for many decades to come. This is just one little example, among many others, of why attempting to iteratively change C++ in the hope of getting it to the point where it matches the ergonomics of Rust, is ultimately a futile evolutionary dead-end that does the C++ community more harm than good…

When arrays are used as e.g. struct members, they are allocated

inline, so for example an array within a heap-allocated struct is part

of the same allocation as the hosting struct.

Iterators are themselves implemented using unsafe, but that’s the

standard library maintainers’ problem to deal with, not yours.

Cough cough

Deref trait cough

cough.

Squaring

As you could see if you did the previous set of exercises, we have already covered enough Rust to start doing some actually useful computations.

There is still one important building block that we are missing to make the most of Rust iterator adapters, however, and that is anonymous functions, also known as lambda functions or lexical closures in programming language theory circles.

In this chapter, we will introduce this language feature, and show how it can be used, along with Rust traits and the higher-order function pattern, to compute the square of every element of an array using fully idiomatic Rust code.

Meet the lambda

In Rust, you can define a function anywhere, including inside of another

function1. Parameter types are specified using the same syntax as variable

type ascription, and return types can be specified at the end after a -> arrow

sign:

#![allow(unused)] fn main() { fn outsourcing(x: u8, y: u8) { fn sum(a: u8, b: u8) -> u8 { // Unlike in C/++, return statements are unnecessary in simple cases. // Just write what you want to return as a trailing expression. a + b } println!("{}", sum(x, y)); } }

However, Rust is not Python, and inner function definitions cannot capture variables from the scope of outer function definitions. In other words, the following code does not compile:

#![allow(unused)] fn main() { fn outsourcing(x: u8, y: u8) { fn sum() -> u8 { // ERROR: There are no "x" and "y" variables in this scope. x + y } println!("{}", sum()); } }

Rust provides a slightly different abstraction for this, namely anonymous functions aka lambdas aka closures. In addition to being able to capture surrounding variables, these also come with much lighter-weight syntax for simple use cases…

#![allow(unused)] fn main() { fn outsourcing(x: u8, y: u8) { let sum = || x + y; // Notice that the "-> u8" return type is inferred. // If you have parameters, their type is also inferred. println!("{}", sum()); } }

…while still supporting the same level of type annotation sophistication as full function declarations, should you need it for type inference or clarity:

#![allow(unused)] fn main() { fn outsourcing(x: u8, y: u8) { let sum = |a: u8, b: u8| -> u8 { a + b }; println!("{}", sum(x, y)); } }

The main use case for lambda functions, however, is interaction with higher-order functions: functions that take other functions as inputs and/or return other functions as output.

A glimpse of Rust traits

We have touched upon the notion of traits several time before in this course, without taking the time to really explain it. That’s because Rust traits are a complex topic, which we do not have the luxury of covering in depth in this short 1 day course.

But now that we are getting to higher-order functions, we are going to need to interact a little bit more with Rust traits, so this is a good time to expand a bit more on what Rust traits do.

Traits are the cornerstone of Rust’s genericity and polymorphism system. They let you define a common protocol for interacting with several different types in a homogeneous way. If you are familiar with C++, traits in Rust can be used to replace any of the following C++ features:

- Virtual methods and overrides

- Templates and C++20 concepts, with first-class support for the “type trait” pattern

- Function and method overloading

- Implicit conversions

The main advantage of having one single complex general-purpose language feature like this, instead of many simpler narrow-purpose features, is that you do not need to deal with interactions between the narrow-purpose features. As C++ practicioners know, these can be result in quite surprising behavior and getting their combination right is a very subtle art.

Another practical advantage is that you will less often hit a complexity wall, where you hit the limits of the particular language feature that you were using and must rewrite large chunks code in terms of a completely different language feature.

Finally, Rust traits let you do things that are impossible in C++. Such as adding methods to third-party types, or verifying that generic code follows its intended API contract.

If you are a C++ practicioner and just started thinking "hold on, weren't C++20 concepts supposed to fix this generics API contract problem?", please click on the arrow for a full explanation.

Let us assume that you are writing a generic function and claim that it works with any type that has an addition operator. The Rust trait system will check that this is indeed the case as the generic code is compiled. Should you use any other type operation like, say, the multiplication operator, the compiler will error out during the compilation of the generic code, and ask you to either add this operation to the generic function’s API contract or remove it from its implementation.

In contrast, C++20 concepts only let you check that the type parameters that generic code is instantiated with match the advertised contract. Therefore, in our example scenario, the use of C++20 concepts will be ineffective, and the compilation of the generic code will succeed in spite of the stated API contract being incorrect.

It is only later, as someone tries to use your code with a type that has an addition operator but no multiplication operator (like, say, a linear algebra vector type that does not use

operator*for the dot product), that an error will be produced deep inside of the implementation of the generic code.The error will point at the use of the multiplication operator by the implementation of the generic code. Which may be only remotely related to what the user is trying to do with your library, as your function may be a small implementation detail of a much bigger functionality. It may thus take users some mental gymnastics to figure out what’s going on. This is part of why templates have a bad ergonomics reputation in C++, the other part being that function overloading as a programming language feature is fundamentally incompatible with good compiler error messages.

And sadly this error is unlikely to be caught during your testing because generic code can only be tested by instantitating it with specific types. As an author of generic code, you are unlikely to think about types with an addition operator but no multiplication operator, since these are relatively rare in programming.

To summarize, unlike C++20 concepts, Rust traits are actually effective at making unclear compiler error messages deep inside of the implementation of generic code a thing of the past. They do not only work under the unrealistic expectation that authors of generic code are perfectly careful to type in the right concepts in the signature of generic code, and to keep the unchecked concept annotations up to date as the generic code’s implementation evolves2.

Higher order functions

One of Rust’s most important traits is the

Fn trait, which is

implemented for types that can be called like a function. It also has a few

cousins that we will cover later on.

Thanks to special treatment by the compiler3, the Fn trait is actually a

family of traits that can be written like a function signature, without

parameter names. So for example, an object of a type that implements the

Fn(i16, f32) -> usize trait can be called like a function, with two parameters

of type i16 and f32, and the call will return a result of type usize.

You can write a generic function that accepts any object of such a type like this…

#![allow(unused)] fn main() { fn outsourcing(op: impl Fn(i16, f32) -> usize) { println!("The result is {}", op(42, 4.2)); } }

…and it will accept any matching callable object, including both regular functions, and closures:

#![allow(unused)] fn main() { fn outsourcing(op: impl Fn(i16, f32) -> usize) { println!("The result is {}", op(42, 6.66)); } // Example with a regular function fn my_op(x: i16, y: f32) -> usize { (x as f32 + 1.23 * y) as usize } outsourcing(my_op); // Example with a closure outsourcing(|x, y| { println!("x may be {x}, y may be {y}, but there is only one answer"); 42 }); }

As you can see, closures shine in this role by keeping the syntax lean and the code more focused on the task at hand. Their ability to capture environment can also be very powerful in this situation, as we will see in later chapters.

You can also use the impl Trait syntax as the return type of a function, in

order to state that you are returning an object of a type that implements a

certain trait, without specifying what the trait is.

This is especially useful when working with closures, because the type of a closure object is a compiler-internal secret that cannot be named by the programmer:

#![allow(unused)] fn main() { /// Returns a function object with the signature that we have seen so far fn make_op() -> impl Fn(i16, f32) -> usize { |x, y| (x as f32 + 1.23 * y) as usize } }

By combining these two features, Rust programmers can very easily implement any

higher-order function that takes a function as a parameter or returns a

function as a result. And because the code of these higher-order functions is

specialized for the specific function type that you’re dealing with at compile

time, runtime performance can be much better than when using dynamically

dispatched higher-order function abstractions in other languages, like

std::function in C++4.

Squaring numbers at last

The Iterator trait provides a number of methods that are actually higher-order

functions. The simpler of them is the map method, which consumes the input

iterator, takes a user-provided function, and produces an output iterator whose

elements are the result of applying the user-provided function to each element

of the input iterator:

#![allow(unused)] fn main() { let numbers = [1.2f32, 3.4, 5.6]; let squares = numbers.into_iter() .map(|x| x.powi(2)) .collect::<Vec<_>>(); println!("{numbers:?} squared is {squares:?}"); }

And thanks to good language design and heroic optimization work by the Rust compiler team, the result will be just as fast as hand-optimized assembly for all but the smallest input sizes5.

Exercise

Now, go to your code editor, open the examples/04-square-one.rs source file,

and address the TODOs in it. The code should compile and runs successfully at

the end.

To attempt to compile and run the file after making corrections, you may use the following command in the VSCode terminal:

cargo run --example 04-square-one

This reflects a more general Rust design choice of letting almost everything be declared almost anywhere, for example Rust will happily declaring types inside of functions, or even inside of value expressions.

You may think that this is another instance of the C++ standardization commitee painstakingly putting together a bad clone of a Rust feature as an attempt to play catch-up 5 years after the first stable release of Rust. But that is actually not the case. C++ concepts have been in development for more than 10 years, and were a major contemporary inspiration for the development of Rust traits along with Haskell’s typeclasses. However, the politically dysfunctional C++ standardization commitee failed to reach an agreement on the original vision, and had to heavily descope it before they succeeded at getting the feature out of the door in C++20. In contrast, Rust easily succeeded at integrating a much more ambitious generics API contract system into the language. This highlights once again the challenges of integrating major changes into an established programming language, and why the C++ standardization commitee might actually better serve C++ practicioners by embracing the “refine and polish” strategy of its C and Fortran counterparts.

There are a number of language entities like this that get special treatment by the Rust compiler. This is done as a pragmatic alternative to spending more time designing a general version that could be used by library authors, but would get it in the hands of Rust developers much later. The long-term goal is to reduce the number of these exceptions over time, in order to give library authors more power and reduce the amount of functionality that can only be implemented inside of the standard library.

Of course, there is no free lunch in programming, and all this

compile-time specialization comes at the cost. As with C++ templates, the

compiler must effectively recompile higher-order functions that take

functions as a parameter for each input function that they’re called with.

This will result in compilation taking longer, consuming more RAM, and

producing larger output binaries. If this is a problem and the runtime

performance gains are not useful to your use case, you can use dyn Trait

instead of impl Trait to switch to dynamic dispatch, which works much

like C++ virtual methods. But that is beyond the scope of this short

course.

To handle arrays smaller than about 100 elements optimally, you will need to specialize the code for the input size (which is possible but beyond the scope of this course) and make sure the input data is optimally aligned in memory for SIMD processing (which we will cover later on).

Hadamard

So far, we have been iterating over a single array/Vec/slice at a time. And

this already took us through a few basic computation patterns. But we must not

forget that with the tools introduced so far, jointly iterating over two Vecs

at the same time still involves some pretty yucky code:

#![allow(unused)] fn main() { let v1 = vec![1, 2, 3, 4]; let v2 = vec![5, 6, 7, 8]; for (idx, elem) in v1.into_iter().enumerate() { println!("v1[{idx}] is {elem}"); // Ew, manual indexing with bound checks and panic risks... :( println!("v2[{idx}] is {}", v2[idx]); } }

Thankfully, there is an easy fix called zip(). Let’s use it to implement the

Hadamard product!

Combining iterators with zip()

Iterators come with an adapter method called zip(). Like for loops, this

method expects an object of a type that implements IntoInterator. What it does

is to consume the input iterator, turn the user-provided object into an

interator, and return a new iterator that yields pairs of elements from both the

original iterator and the user-provided iterable object:

#![allow(unused)] fn main() { let v1 = vec![1, 2, 3, 4]; let v2 = vec![5, 6, 7, 8]; /// Iteration will yield (1, 5), (2, 6), (3, 7) and (4, 8) for (x1, x2) in v1.into_iter().zip(v2) { println!("Got {x1} from v1 and {x2} from v2"); } }

Now, if you have used the zip() method of any other programming language for

numerics before, you should have two burning questions:

- What happens if the two input iterators are of different length?

- Is this really as performant as a manual indexing-based loop?

To the first question, other programming languages have come with three typical answers:

- Stop when the shortest iterator stops, ignoring remaining elements of the other iterator.

- Treat this as a usage error and report it using some error handling mechanism.

- Make it undefined behavior and give the compiler license to randomly trash the program.

As you may guess, Rust did not pick the third option. It could reasonably have

picked the second option, but instead it opted to pick the first option. This

was likely done because error handling comes at a runtime performance cost, that

was not felt to be acceptable for this common performance-sensitive operation

where user error is rare. But should you need it, option 2 can be easily built

as a third-party library, and is therefore available via the popular

itertools crate.

Speaking of performance, Rust’s zip() is, perhaps surprisingly, usually just

as good as a hand-tuned indexing loop1. It does not exhibit the runtime

performance issues that were discussed in the C++ course when presenting C++20’s

range-zipping operations2. And it will especially be often highly superior to

manual indexing code, which come with a risk of panics and makes you rely on the

black magic of compiler optimizations to remove indexing-associated bound

checks. Therefore, you are strongly encouraged to use zip() liberally in your

code!

Hadamard product

One simple use of zip() is to implement the Hadamard vector product.

This is one of several different kinds of products that you can use in linear algebra. It works by taking two vectors of the same dimensionality as input, and producing a third vector of the same dimensionality, which contains the pairwise products of elements from both input vectors:

#![allow(unused)] fn main() { fn hadamard(v1: Vec<f32>, v2: Vec<f32>) -> Vec<f32> { assert_eq!(v1.len(), v2.len()); v1.into_iter().zip(v2) .map(|(x1, x2)| x1 * x2) .collect() } assert_eq!( hadamard(vec![1.2, 3.4, 5.6], vec![9.8, 7.6, 5.4]), [ 1.2 * 9.8, 3.4 * 7.6, 5.6 * 5.4 ] ); }

Exercise

Now, go to your code editor, open the examples/05-hadamantium.rs source file,

and address the TODOs in it. The code should compile and runs successfully at

the end.

To attempt to compile and run the file after making corrections, you may use the following command in the VSCode terminal:

cargo run --example 05-hadamantium

This is not to say that the hand-tuned indexing loop is itself perfect. It will inevitably suffer from runtime performance issues caused by suboptimal data alignment. But we will discuss how to solve this problem and achieve optimal Hadamard product performance after we cover data reductions, which suffer from much more severe runtime performance problems that include this one among many others.

The most likely reason why this is the case is that Rust pragmatically

opted to make tuples a primitive language type that gets special support

from the compiler, which in turn allows the Rust compiler to give its LLVM

backend very strong hints about how code should be generated (e.g. pass

tuple elements via CPU registers, not stack pushes and pops that may or

may not be optimized out by later passes). On its side, the C++

standardization commitee did not do this because they cared more about

keeping std::tuple a library-defined type that any sufficiently

motivated programmer could re-implement on their own given an infinite

amount of spare time. This is another example, if needed be, that even

though both the C++ and Rust community care a lot about giving maximal

power to library writers and minimizing the special nature of their

respective standard libraries, it is important to mentally balance the

benefits of this against the immense short-term efficiency of letting the

compiler treat a few types and functions specially. As always, programming

language design is all about tradeoffs.

Sum and dot

All the computations that we have discussed so far are, in the jargon of SIMD programming, vertical operations. For each element of the input arrays, they produce zero or one matching output array element. From the perspective of software performance, these vertical operations are the best-case scenario, and compilers know how to produce very efficient code out of them without much assistance from the programmer. Which is why we have not discussed performance much so far.

But in this chapter, we will now switch our focus to horizontal operations, also known as reductions. We will see why these operations are much more challenging to compiler optimizers, and then the next few chapters will cover what programmers can do to make them more efficient.

Summing numbers

One of the simplest reduction operations that one can do with an array of floating-point numbers is to compute the sum of the numbers. Because this is a common operation, Rust iterators make it very easy by providing a dedicated method for it:

#![allow(unused)] fn main() { let sum = [1.2, 3.4, 5.6, 7.8].into_iter().sum::<f32>(); }

The only surprising thing here might be the need to spell out the type of the sum. This need does not come up because the compiler does not know about the type of the array elements that we are summing. That could be handled by the default “unknown floats are f64” fallback.

The problem is instead that the sum function is generic in order to be able to work with both numbers and reference to numbers (which we have not covered yet, but will soon enough, for now think of them as C pointers). And wherever there is genericity in Rust, there is loss of type inference.

Dot product

Once we have zip(), map() and sum(), it takes only very little work to

combine them in order to implement a simple Euclidean dot product:

#![allow(unused)] fn main() { let x = [1.2f32, 3.4, 5.6, 7.8]; let y = [9.8, 7.6, 5.4, 3.2]; let dot = x.into_iter().zip(y) .map(|(x, y)| x * y) .sum::<f32>(); }

Hardware-minded people, however, may know that we are leaving some performance and floating-point precision on the table by doing it like this. Modern CPUs come with fused multiply-add operations that are as costly as an addition or multiplication, and do not round between the multiplication and addition which results in better output precision.

Rust exposes these hardware operations1, and we can use them by switching to

a more general cousin of sum() called fold():

#![allow(unused)] fn main() { let x = [1.2f32, 3.4, 5.6, 7.8]; let y = [9.8, 7.6, 5.4, 3.2]; let dot = x.into_iter().zip(y) .fold(0.0, |acc, (x, y)| x.mul_add(y, acc)); }

Fold works by initializing an accumulator variable with a user-provided value, and then going through each element of the input iterator and integrating it into the accumulator, each time producing an updated accumulator. In other words, the above code is equivalent to this:

#![allow(unused)] fn main() { let x = [1.2f32, 3.4, 5.6, 7.8]; let y = [9.8, 7.6, 5.4, 3.2]; let mut acc = 0.0; for (x, y) in x.into_iter().zip(y) { acc = x.mul_add(y, acc); } let dot = acc; }

And indeed, the iterator fold method optimizes just as well as the above imperative code, resulting in identical generate machine code. The problem is that unfortunately, that imperative code itself is not ideal and will result in very poor computational performance. We will now show how to quantify this problem, and then explain why it happens and what you can do about it.

Setting up criterion

The need for criterion

With modern hardware, compiler and operating systems, measuring the performance of short-running code snippets has become a fine art that requires a fair amount of care.

Simply surrounding code with OS timer calls and subtracting the readings may have worked well many decades ago. But nowadays it is often necessary to use specialized tooling that leverages repeated measurements and statistical analysis, in order to get stable performance numbers that truly reflect the code’s performance and are reproducible from one execution to another.

The Rust compiler has built-in tools for this, but unfortunately they are not

fully exposed by stable versions at the time of writing, as there is a

longstanding desire to clean up and rework some of the associated APIs before

exposing them for broader use. As a result, third-party libraries should be used

for now. In this course, we will be mainly using

criterion as it is

by far the most mature and popular option available to Rust developers

today.2

Adding criterion to a project

Because criterion is based on the Rust compiler’s benchmarking infrastructure,

but cannot fully use it as it is not completely stabilized yet, it does

unfortunately require a fair bit of unclean setup. First you must add a

dependency on criterion in your Rust project. For network policy reasons, we

have already done this for you in the example source code. But for your

information, it is done using the following command:

cargo add --dev criterion

cargo add is an easy-to-use tool for editing your Rust project’s Cargo.toml

configuration file in order to register a dependency on (by default) the latest

version of some library. With the --dev option, we specify that this

dependency will only be used during development, and should not be included in

production builds, which is the right thing to do for a benchmarking harness.

After this, every time we add a benchmark to the application, we will need to

manually edit the Cargo.toml configuration file in order to add an entry that

disables the Rust compiler’s built-in benchmark harness. This is done so that it

does not interfere with criterion’s work by erroring out on criterion-specific

CLI benchmark options that it does not expect. The associated Cargo.toml

configuration file entry looks like this:

[[bench]]

name = "my_benchmark"

harness = false

Unfortunately, this is not yet enough, because benchmarks can be declared pretty

much anywhere in Rust. So we must additionally disable the compiler’s built-in

benchmark harness on every other binary defined by the project. For a simple

library project that defines no extra binaries, this extra Cargo.toml

configuration entry should do it:

[lib]

bench = false

It is only after we have done all of this setup, that we can get criterion

benchmarks that will reliably accept our CLI arguments, no matter how they were

started.

A benchmark skeleton

Now that our Rust project is set up properly for benchmarking, we can start

writing a benchmark. First you need to create a benches directory in

your project if it does not already exists, and create a source file there,

named however you like with a .rs extension.

Then you must add the criterion boilerplate to this source file, which is

partially automated using macros, in order to get a runnable benchmark that

integrates with the standard cargo bench tool…

use criterion::{black_box, criterion_group, criterion_main, Criterion};

pub fn criterion_benchmark(c: &mut Criterion) {

// This is just an example benchmark that you can freely delete

c.bench_function("sqrt 4.2", |b| b.iter(|| black_box(4.2).sqrt()));

}

criterion_group!(benches, criterion_benchmark);

criterion_main!(benches);…and finally you must add the aforementioned Cargo.toml boilerplate so that

criterion CLI arguments keep working as expected. Assuming you unimaginatively

named your benchmark source file “benchmark.rs”, this would be…

[[bench]]

name = "benchmark"

harness = false

Writing a good microbenchmark

There are a few basic rules that you should always follow whenever you are writing a microbenchmark that measures the performance of a small function in your code, if you do not want the compiler’s optimizer to transform your benchmark in unrealistic ways:

- Any input value that is known to the compiler’s optimizer can be used to tune

the code specifically for this input value, and sometimes even to reduce the

benchmarking loop to a single iteration by leveraging the fact that the

computation always operate on the same input. Therefore, you must always hide

inputs from the compiler’s optimizer using an optimization barrier such as

criterion’sblack_box(). - Any output value that is not used in any way is a useless computation in the

eyes of the compiler’s optimizer. Therefore, the compiler’s optimizer will

attempt to delete any code that is involved in the computation of such values.

To avoid this, you will want again to feed results into an optimization

barrier like

criterion’sblack_box().criterionimplicitly does this for any output value that you return from theiter()API’s callback.

It’s not just about the compiler’s optimizer though. Hardware and operating systems can also leverage the regular nature of microbenchmarks to optimize performance in unrealistic ways, for example CPUs will exhibit an unusually good cache hit rate when running benchmarks that always operate on the same input values. This is not something that you can guard against, just a pitfall that you need to keep in mind when interpreting benchmark results: absolute timings are usually an overly optimistic estimate of your application’s performance, and therefore the most interesting output of microbenchmarks is actually not the raw result but the relative variations of this result when you change the code that is being benchmarked.

Finally, on a more fundamental level, you must understand that on modern

hardware, performance usually depends on problem size in a highly nonlinear and

non-obvious manner. It is therefore a good idea to test the performance of your

functions over a wide range of problem sizes. Geometric sequences of problem

sizes like [1, 2, 4, 8, 16, 32, ...] are often a good default choice.

Exercise

Due to Rust benchmark harness design choices, the exercise for this chapter

will, for once, not take place in the examples subdirectory of the exercises’

source tree.

Instead, you will mainly work on the benches/06-summit.rs source file, which

is a Criterion benchmark that was created using the procedure described above.

Implement the sum() function within this benchmark to make it sum the elements

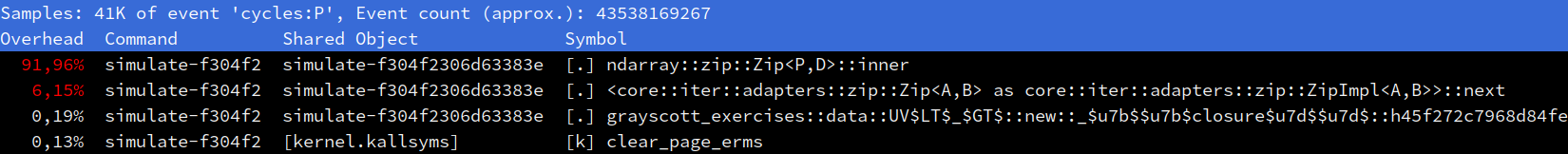

of its input Vec, then run the benchmark with cargo bench --bench 06-summit.

To correctly interpret the results, you should know that a single core of a modern x86 CPU, with a 2 GHz clock rate and AVX SIMD instructions, can perform 32 billion f32 sums per second.3

Technically, at this stage of our performance optimization journey, we can

only use these operations via a costly libm function call. This happens

because not all x86_64 CPUs support the fma instruction family, and the

compiler has to be conservative in order to produce code that runs

everywhere. Later, we will see how to leverage modern hardware better

using the multiversion crate.

Although criterion would be my recommendation today due to its

feature-completeness and popularity, divan is

quickly shaping up into an interesting alternative that might make it the

recommendation in a future edition of this course. Its benefits over

criterion include significantly improved API ergonomics, faster

measurements, and better support for code that is generic over

compile-time quantities aka “const generics”.

Recent Intel CPUs (as of 2024) introduced the ability to perform a third SIMD sum per clock cycle, which bumps the theoretical limit to 48 billion f32 sums per second per 2 GHz CPU core.

Ownership

If you played with the example float squaring benchmark of the last chapter before replacing it with a float summing benchmark, you may have noticed that its performance was already quite bad. Half a nanosecond per vector element may not sound like much, but when we’re dealing with CPUs that can process tens of multiplications in that time, it’s already something to be ashamed of.